UPDATE #2: September 6, 2010

At noontime Eastern, I heard from Naoko again and she confirmed robots.txt has been upgraded site-wide on WordPress.com!

I had to change the privacy settings on all my blogs — and then back again to “public” — to force the new robots.txt file to update. The plan worked.

All 13 public blogs are now set and updated and ready for Google and the rest of the indexed search world to remove our proprietary Movable Type search results.

Here’s a screenshot of the new robots.txt file disallowing the “/cgi-bin/” directory. I highlighted the new addition:

Yay, WordPress.com!

THANK YOU for prying us from the rock!

UPDATE:

The moment I published this article today at 12:24pm Eastern time, I followed up with WordPress.com support and gave them the link to this article in an attempt to better explain, with screenshots, the problem I was trying to solve.

At 2:12pm — less than two hours after I wrote to WordPress.com — Naoko replied:

Hi there,

I was waiting for this to actually go live, but a change has been made in our code.

User-agent: *

Disallow: /cgi-bin/

Will be added to robots.txt (not visible yet, I need to check back with the developer).

Fantastic news! That solves my proprietary Movable Type search results problem across all 13 of my public WordPress.com blogs! Here is my reply:

Hi Naoko!

Oh, that’s great news! Is this change on a per-blog basis, or is it site wide?

If it’s site wide, are there plans to include robots.txt proprietary search disallows for the other blogging services?

I will update my article to reflect the information you provide.

Thanks!

Best,

db

I will keep you updated!

I don’t see the “/cgi-bin/” disallow yet on any of my blogs in robots.txt, but the moment it goes live, I will go back to Webmaster Tools and specifically ask that the “/cgi-bin/” directory be removed now and forever from all my blogs.

As well, because of this robots.txt disallow addition, I will now be able to effectively venture into Yahoo! and Bing to see if I can get the same directory deleted in those services for all my blogs.

Thank you WordPress.com Gods!

ORIGINAL ARTICLE:

I recently discovered a terrible Movable Type artifact that still remains festering and alive within me — via Google Search Returns — six months after I became a Six Apart refugee and gave up my expensive, self-hosted, standalone, blog hosting and returned to my first blogging home: WordPress.com. You can see an example of the problem below in the third search return in the screenshot. That “Memeingful: Search Results” link takes you to a proprietary Movable Type search return that has been dead for six months. Click on that link, and you’ll be taken to a “Not Found” error page on WordPress.com.

After I discovered that first dead search result, I decided to do a few more searches to see if this nightmare was an anomaly or something more widespread and infectious.

My worst contagion concerns were confirmed as I was presented with thousands of pages — across many searches based on my 13 blogs — of bad Movable Type search returns that take you nowhere, but that were still alive within Google:

Those dead links came from searches done right on my Movable Type blog and then indexed by Google. Google must have found a way to directly index my sites using the search form. That would be great if I were still self-hosted with Movable Type, but now that I’m on WordPress.com, those proprietary Movable Type search results are meaningless.

Here’s an example of what you see when you click through on one of those bad, but very alive, Google links culled from my Movable Type blogs:

I did a lot of research to try to figure out how to fix this mess. I discovered you can “remove a single URL” from Google, but the process is more complicated when you need to quickly remove thousands of faulty search returns via a directory deletion.

Just waiting for Google to re-index my blogs won’t remove those dead links. Google uses a Sitemap to follow WordPress.com blogs, so the good links will be reconfirmed, but the bad links stay because they aren’t part of my Sitemap and don’t get regularly re-tested by Google. While those dead returns may eventually peel away and decay one day, there’s no set time frame or promise for when, or if, that will happen.

The trick to getting this fixed forever, it seems, is to force the removal of an entire directory.

In my case, I need this string removed from Google for all my blogs because it leads to all the bad returns. Getting rid of the “cgi-bin” directory should solve my Google problem:

//cgi-bin/mt/mt-search.cgi

Unfortunately, the only way to mass delete a bad directory is to request it via Google Webmaster Tools AND to then enter a “Disallow” line in the robots.txt file for each blog.

Google will not remove a directory via a Webmaster Tools request alone. You must also have a robots.txt disallow because, Google argues, directories can have more “indexible” content other than just web pages.

Unfortunately, WordPress.com does not currently allow users to edit, or add to, their robots.txt file. Here’s the current, universal, robots.txt default for all WordPress.com blogs. I have no idea what the “# har har” comment means or why it is there:

Sitemap: http://relationshaping.com/sitemap.xml

User-agent: IRLbot
Crawl-delay: 3600

User-agent: *
Disallow: /next/

# har har
User-agent: *
Disallow: /activate/

User-agent: *
Disallow: /signup/

User-agent: *
Disallow: /related-tags.php

User-agent: *
Disallow:
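To see why the default file above does nothing for the dead search links, and why one extra line would, here is a minimal Python sketch. It is a simplified prefix matcher written for illustration, not how Google or any other crawler actually evaluates robots.txt: it ignores "Allow" lines, wildcards, and longest-match rules. The function names, the line layout of the default file, and the single-slash form of the search path are my assumptions.

```python
# Default WordPress.com robots.txt as quoted above (line breaks are assumed).
DEFAULT_ROBOTS = """\
Sitemap: http://relationshaping.com/sitemap.xml

User-agent: IRLbot
Crawl-delay: 3600

User-agent: *
Disallow: /next/

# har har
User-agent: *
Disallow: /activate/

User-agent: *
Disallow: /signup/

User-agent: *
Disallow: /related-tags.php

User-agent: *
Disallow:
"""

# The same file with the rule WordPress.com support said would be added.
AMENDED_ROBOTS = DEFAULT_ROBOTS + "\nUser-agent: *\nDisallow: /cgi-bin/\n"

# Assumed single-slash form of the Movable Type search script path.
SEARCH_PATH = "/cgi-bin/mt/mt-search.cgi"


def disallowed_prefixes(robots_txt: str, agent: str = "*") -> list[str]:
    """Collect Disallow prefixes from every group that names `agent`."""
    prefixes = []
    group_agents = []       # user-agents named by the group being read
    reading_agents = False  # True while consecutive User-agent lines continue
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line or ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if not reading_agents:  # a new group starts here
                group_agents = []
                reading_agents = True
            group_agents.append(value.lower())
        else:
            reading_agents = False
            # An empty "Disallow:" blocks nothing, so it is skipped.
            if field == "disallow" and value and agent.lower() in group_agents:
                prefixes.append(value)
    return prefixes


def is_blocked(path: str, robots_txt: str, agent: str = "*") -> bool:
    """True if `path` starts with any Disallow prefix for `agent`."""
    return any(path.startswith(p) for p in disallowed_prefixes(robots_txt, agent))


if __name__ == "__main__":
    print("default file blocks search script:", is_blocked(SEARCH_PATH, DEFAULT_ROBOTS))
    print("amended file blocks search script:", is_blocked(SEARCH_PATH, AMENDED_ROBOTS))
```

Under these assumptions the default file leaves the search script crawlable, while the amended file blocks everything under "/cgi-bin/".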

Here’s a screenshot of how Google Webmaster Tools views my current robots.txt file for my Boles Blues blog on WordPress.com:

When I asked WordPress.com support for a solution to this “Thousands of Dead Links” problem, I was told what I already knew: WordPress.com does not allow the editing of robots.txt and, if I wanted to edit robots.txt, I should leave WordPress.com and go to a self-hosted situation.

I’ve already paid WordPress.com hundreds of dollars for blog upgrades, including “No Ads” and the “CSS Upgrade” and such, so leaving the hosting service is not a viable business option. There has to be a better, simpler way to fix this problem.

When I first started blogging at WordPress.com, we were not allowed to edit CSS, add DNS entries, or confirm ownership of our blogs with Google Webmaster Tools, but we can now.

My hope is the WordPress.com Gods will realize there are some instances when getting access to our robots.txt file is necessary. Make access a paid upgrade if that helps.

Or, let us only add a disallow to our file that would have to be approved by staff.

Or, better yet, WordPress.com could insert universal “third party search directory disallow entries” in every robots.txt file for all the major blogging platforms that would protect blogging refugees from having dead search entries once they transfer their blog to WordPress.com.

If you had a big blog on Blogger or TypePad or Movable Type, or another platform — and then moved to WordPress.com because of its excellence and convenience — the old, proprietary, blog search returns done on your blog proper have all been indexed by Google, and you likely have hundreds, if not thousands, of dead links stuck in Google you probably didn’t even know existed until now, and that you currently have no way to mass delete.

Having a WordPress.com robots.txt universal directory disallow also covers us across all the search spiders: Google and Yahoo! and Microsoft, etc. Right now, the only way I can find to remove these dead entries is to ask them to be removed, one by one, via Google Webmaster Tools. I’d have to repeat that process for Yahoo! and Microsoft and all the other search indexes if I wanted a universal cleansing of the bad returns.

Here’s the process I’ve been using.

1. Search Google.

2. Click on a dead link.

3. Copy that dead link.

4. Ask for removal via Webmaster Tools.

Each request gets added to a “Pending” queue.
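Keeping track of which dead links have already been copied gets tedious across 13 blogs. A small Python sketch like the one below could help with the bookkeeping for steps 3 and 4. It does not talk to Google or submit anything; the requests themselves still have to be made by hand in Webmaster Tools. The function name and the sample URLs (including their query strings) are hypothetical.

```python
from urllib.parse import urlsplit


def removal_candidates(urls, prefix="/cgi-bin/"):
    """Group unique URLs whose path starts with `prefix` by host name."""
    by_host = {}
    for url in urls:
        url = url.strip()
        parts = urlsplit(url)
        # Collapse a doubled leading slash ("//cgi-bin/") to a single one.
        path = "/" + parts.path.lstrip("/")
        if not path.startswith(prefix):
            continue  # a normal post or page, not a dead search link
        bucket = by_host.setdefault(parts.netloc.lower(), [])
        if url not in bucket:  # skip links already collected
            bucket.append(url)
    return by_host


if __name__ == "__main__":
    # Hypothetical links copied from search results.
    copied = [
        "http://relationshaping.com/cgi-bin/mt/mt-search.cgi?search=a",
        "http://relationshaping.com/cgi-bin/mt/mt-search.cgi?search=a",
        "http://relationshaping.com/2010/09/some-post/",
        "http://example.com//cgi-bin/mt/mt-search.cgi?search=b",
    ]
    for host, links in removal_candidates(copied).items():
        print(host)
        for link in links:
            print("  ", link)
```

Grouping by host matters because each blog has its own removal queue in Webmaster Tools.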

I was able to get —

//cgi-bin/mt/mt-search.cgi

— removed for all my blogs, but that removal did not, as I had hoped, remove all the other entries that build upon that URL because, I guess, I had to request it as a “page removal” rather than as a “directory removal.”

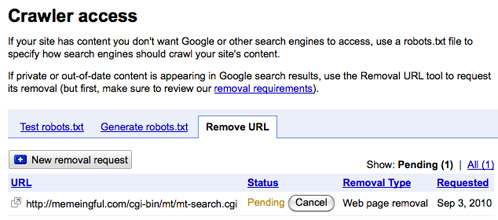

Here’s what my current pending URL removal queue looks like in Google Webmaster Tools for only my RelationShaping.com blog. Each removal request is individually made:

It takes 24 hours for Google to either approve or deny your removal requests.

Here’s the result page for RelationShaping.com. 23 URLs have been removed. 13 requests are still pending. I still have thousands more individual entries to request for removal across all 13 blogs in the Boles Blogs Network.

I know there are gobs of search returns I’m not seeing when I do my rudimentary Google search checks, and that means I have no certain way to remove the bad links across all the web search services.

Currently, I’m just blindly doing searches on Google and holding thumbs that I find as many dead entries as I can and then pin them inside Webmaster Tools for disembowelment.

I hold hope, as well as thumbs, that WordPress.com might come up with an easy and quick way to solve this problem forever for all refugees that leave other blogging platforms for the protection and preservation found in WordPress.com.

David,

Glad they have found a way to sort out this mess!

Yes, I can’t wait for the fix to go live, Gordon. Then I just need to ask for a single directory removal for each blog on all of the major search engines and we will finally, forever, be done with Movable Type!

Well I sure hope this gets solved for you soon. How complicated. I wouldn’t know where to start or even that there was a problem. Glad you click on your own links to make sure everything is working right.

It was a surprise to discover this problem, Anne, and I do hope that soon it will all be fixed for the better.